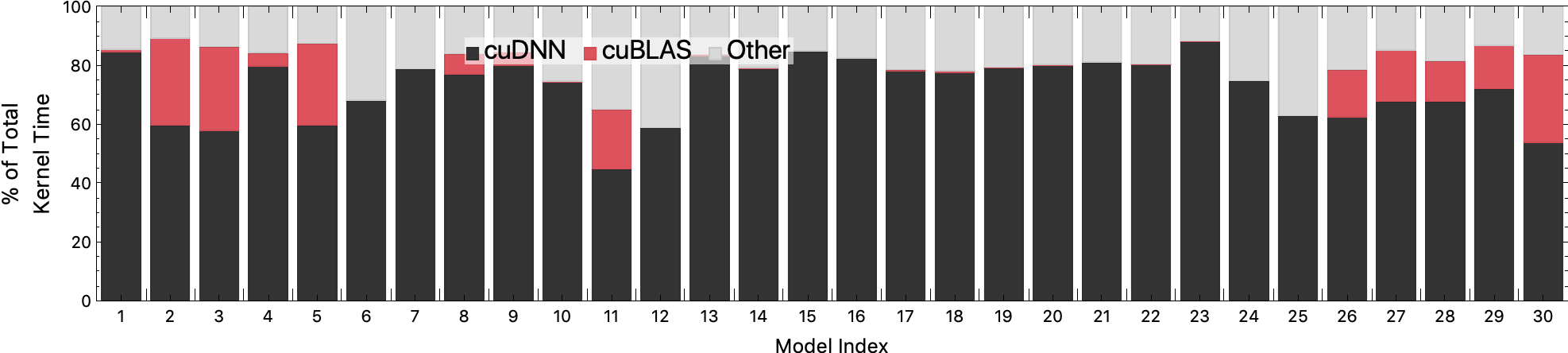

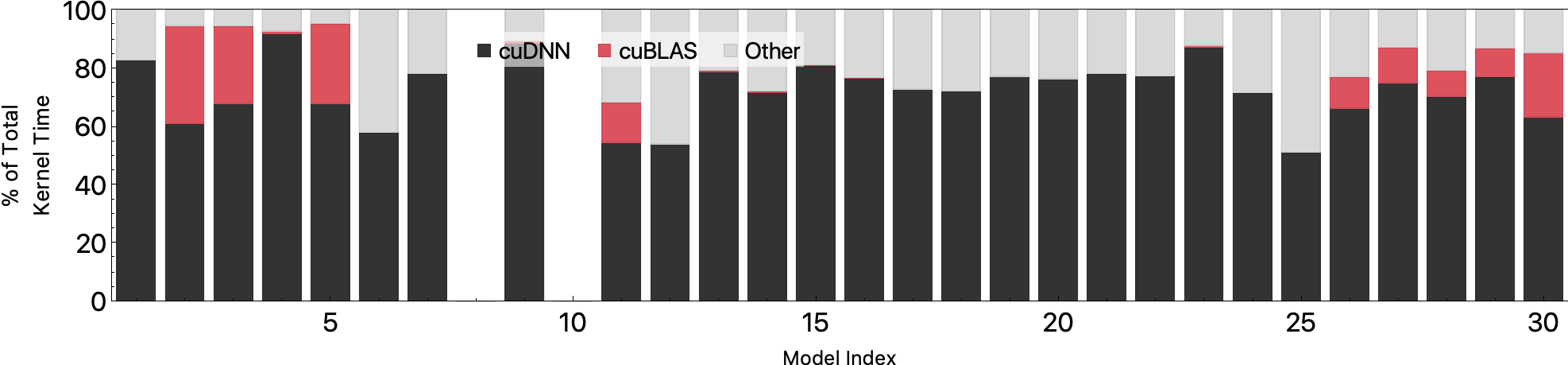

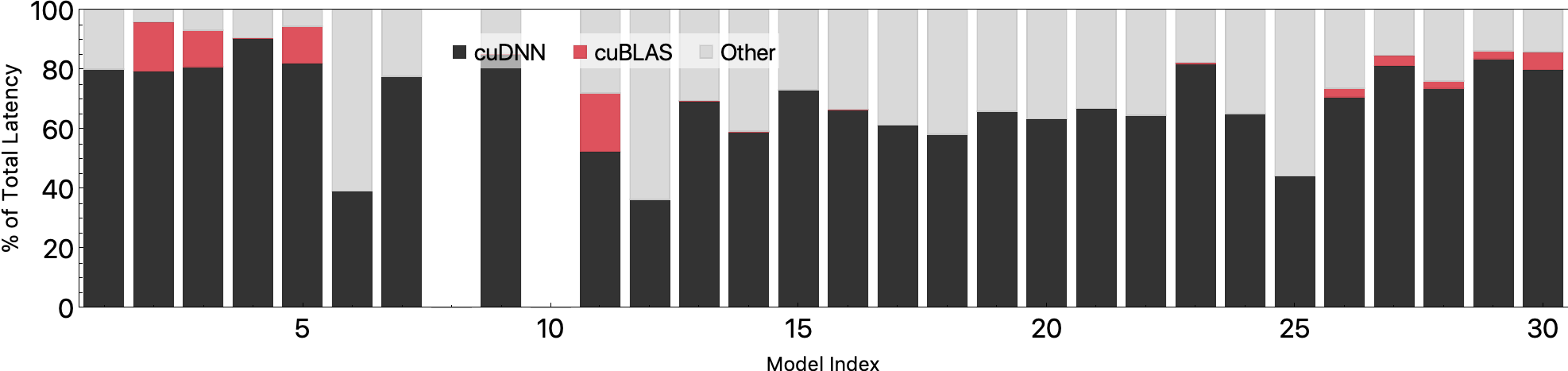

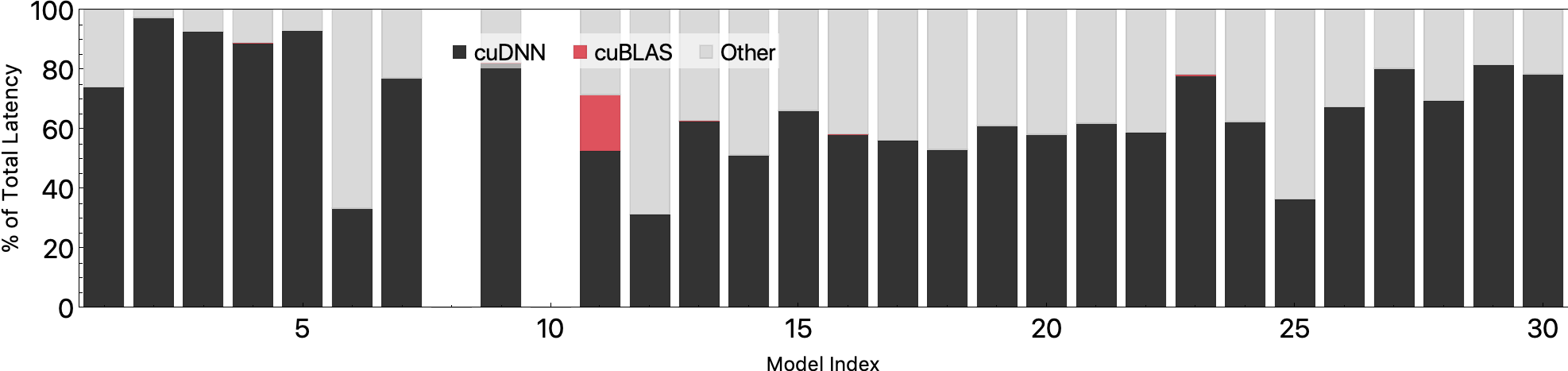

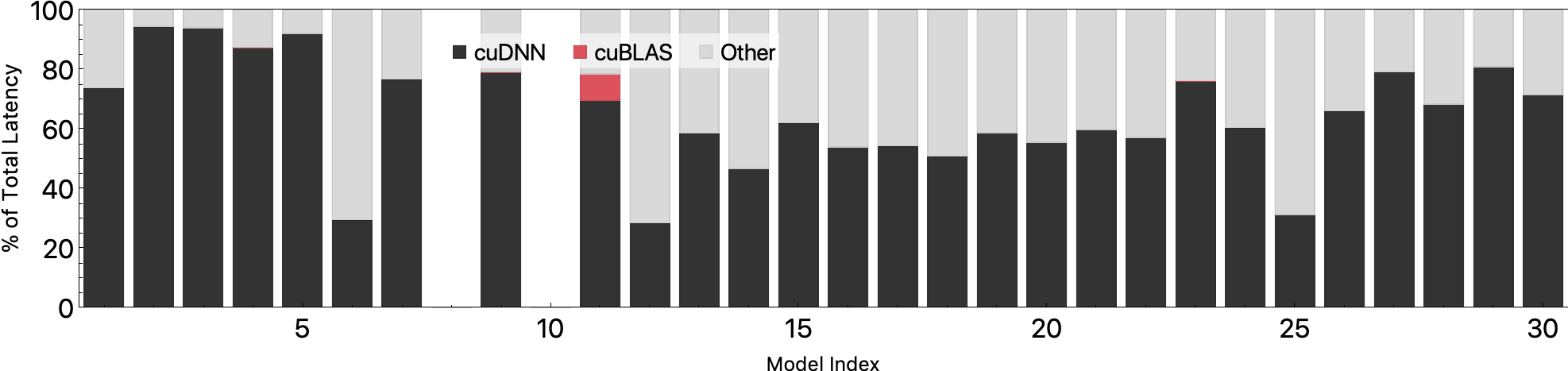

Performance Breakdown

We evaluate the performance of MXNet on a Tesla V100-SXM2-16GB GPU and break down the end-to-end inference latency by whether the time is spent in CUDNN calls, CUBLAS calls, or other calls. As shown below, CUDNN and CUBLAS calls dominate the end-to-end inference latency.
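As a rough illustration of this kind of breakdown, the sketch below groups timed calls into CUDNN, CUBLAS, and other, and reports each group's share of total latency. It is not the tooling used for the evaluation above: the record format (a call name paired with a duration in milliseconds), the prefix-matching rule, the function names `classify_call` and `latency_breakdown`, and the sample durations are all assumptions made for the example.

```python
from collections import defaultdict


def classify_call(name):
    """Map a call name to CUDNN, CUBLAS, or other by its prefix (assumed rule)."""
    lowered = name.lower()
    if lowered.startswith("cudnn"):
        return "CUDNN"
    if lowered.startswith("cublas"):
        return "CUBLAS"
    return "other"


def latency_breakdown(records):
    """Return each category's share of total latency, in percent.

    `records` is an iterable of (call_name, duration_ms) pairs.
    """
    totals = defaultdict(float)
    for name, duration_ms in records:
        totals[classify_call(name)] += duration_ms

    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}
    return {category: 100.0 * total / grand_total
            for category, total in totals.items()}


# Hypothetical trace: the durations are made up for illustration only.
sample_trace = [
    ("cudnnConvolutionForward", 6.0),
    ("cublasSgemm", 3.0),
    ("cudaMemcpyAsync", 1.0),
]

breakdown = latency_breakdown(sample_trace)
for category, percent in sorted(breakdown.items()):
    print(f"{category}: {percent:.1f}%")
```

Matching on the call-name prefix is the simplest possible rule; a real profiler trace may need matching on kernel names or library tags instead.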