Layer Information

Benanza affords you a few ways to examine the topological structure (or architecture) of models. This section describes the base case: given a model, the tool prints out all of its layers. Subsequent sections describe how to use the tool to get layer shape information and layer statistics across models.

Theoretical Flops

Users can get the theoretical flops of a model by using the flopsinfo command.

The command allows one to compute the theoretical flops for each layer within the network.
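As a rough illustration of what a per-layer count involves, the sketch below uses the common convention that a convolution performs one multiply-add per kernel element, per input channel in its group, for every output element. The function names `conv_output_size` and `conv_multiply_adds` are hypothetical, and so is the layer geometry (a 3×224×224 input convolved with 96 filters of size 11×11, stride 4, no padding, giving a 96×54×54 output). These are our assumptions, not Benanza's source. Under them, the result matches the roughly 102 M multiply-adds reported for conv_1 in the per-layer AlexNet table later in this section.

```python
def conv_output_size(in_size: int, kernel: int, stride: int, pad: int) -> int:
    """Spatial output size of a convolution along one dimension (floor mode)."""
    return (in_size + 2 * pad - kernel) // stride + 1


def conv_multiply_adds(c_in: int, h_in: int, w_in: int, c_out: int,
                       kernel: int, stride: int = 1, pad: int = 0,
                       groups: int = 1) -> int:
    """Count multiply-adds for a square-kernel 2D convolution (bias ignored).

    Each output element needs one multiply-add per kernel element
    for every input channel in its group.
    """
    h_out = conv_output_size(h_in, kernel, stride, pad)
    w_out = conv_output_size(w_in, kernel, stride, pad)
    per_output = (c_in // groups) * kernel * kernel
    return c_out * h_out * w_out * per_output


# Assumed geometry for AlexNet's first convolution (hypothetical, for illustration).
mad = conv_multiply_adds(c_in=3, h_in=224, w_in=224, c_out=96, kernel=11, stride=4)
print(mad)  # 101616768, i.e. about 102 M multiply-adds
```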

benanza flopsinfo -h

Get flops information about the model

Usage:

benanza flopsinfo [flags]

Aliases:

flopsinfo, flops

Flags:

-h, --help help for flopsinfo

Global Flags:

-b, --batch_size int batch size (default 1)

-f, --format string print format to use (default "automatic")

--full print all information about the layers

--human print flops in human form

-d, --model_dir string model directory

-p, --model_path string path to the model prototxt file

--no_header show header labels for output

-o, --output_file string output file name

Usage

By default, the flop information is printed as a table. For example, the theoretical flops for AlexNet are:

benanza flopsinfo --model_path //AlexNet/model.onnx

| FLOP TYPE | # |
|---|---|
| MultiplyAdds | 655622016 |
| Additions | 463184 |
| Divisions | 906984 |
| Exponentiations | 453992 |
| Comparisons | 1623808 |
| General | 0 |
| Total | 1314692000 |
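The numbers in this table are consistent with counting each multiply-add as two flops and every other operation as one: 2 × 655622016 + 463184 + 906984 + 453992 + 1623808 + 0 = 1314692000. The short sketch below encodes that relation. The `FlopCounts` class and its `total` method are hypothetical names for illustration, not Benanza's API.

```python
from dataclasses import dataclass


@dataclass
class FlopCounts:
    """Per-category flop counts, mirroring the rows of the table above."""
    multiply_adds: int = 0
    additions: int = 0
    divisions: int = 0
    exponentiations: int = 0
    comparisons: int = 0
    general: int = 0

    def total(self) -> int:
        # A multiply-add is one multiplication plus one addition, so it counts twice.
        return (2 * self.multiply_adds + self.additions + self.divisions
                + self.exponentiations + self.comparisons + self.general)


alexnet = FlopCounts(multiply_adds=655622016, additions=463184,
                     divisions=906984, exponentiations=453992,
                     comparisons=1623808, general=0)
print(alexnet.total())  # 1314692000
```

The same relation holds row by row in the per-layer output, e.g. a ReLU layer with 279936 multiply-adds and 279936 comparisons totals 839808 flops.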

You can also get the theoretical flops of each layer in AlexNet by passing the --full option. Here we also pass the --human option to make the output more readable.

benanza flopsinfo --model_path //AlexNet/model.onnx --full --human

| LAYERNAME | LAYERTYPE | MULTIPLYADDS | ADDITIONS | DIVISIONS | EXPONENTIATIONS | COMPARISONS | GENERAL | TOTAL |
|---|---|---|---|---|---|---|---|---|
| conv_1 | Conv | 102 MFlop | 0 Flops | 0 Flops | 0 Flops | 0 Flops | 0 Flops | 203 MFlop |
| relu_1 | Relu | 280 kFlop | 0 Flops | 0 Flops | 0 Flops | 280 kFlop | 0 Flops | 840 kFlop |
| lrn_1 | LRN | 280 kFlop | 280 kFlop | 560 kFlop | 280 kFlop | 0 Flops | 0 Flops | 1.7 MFlop |
| maxpool_1 | Pooling | 0 Flops | 0 Flops | 0 Flops | 0 Flops | 584 kFlop | 0 Flops | 584 kFlop |
| conv2_2 | Conv | 208 MFlop | 0 Flops | 0 Flops | 0 Flops | 0 Flops | 0 Flops | 415 MFlop |
| relu_2 | Relu | 173 kFlop | 0 Flops | 0 Flops | 0 Flops | 173 kFlop | 0 Flops | 519 kFlop |
| lrn_2 | LRN | 173 kFlop | 173 kFlop | 346 kFlop | 173 kFlop | 0 Flops | 0 Flops | 1.0 MFlop |
| maxpool_2 | Pooling | 0 Flops | 0 Flops | 0 Flops | 0 Flops | 332 kFlop | 0 Flops | 332 kFlop |
| conv_3 | Conv | 127 MFlop | 0 Flops | 0 Flops | 0 Flops | 0 Flops | 0 Flops | 255 MFlop |
| relu_3 | Relu | 55 kFlop | 0 Flops | 0 Flops | 0 Flops | 55 kFlop | 0 Flops | 166 kFlop |
| conv4_2 | Conv | 96 MFlop | 0 Flops | 0 Flops | 0 Flops | 0 Flops | 0 Flops | 191 MFlop |
| relu_4 | Relu | 55 kFlop | 0 Flops | 0 Flops | 0 Flops | 55 kFlop | 0 Flops | 166 kFlop |
| conv5_2 | Conv | 64 MFlop | 0 Flops | 0 Flops | 0 Flops | 0 Flops | 0 Flops | 127 MFlop |
| relu_5 | Relu | 37 kFlop | 0 Flops | 0 Flops | 0 Flops | 37 kFlop | 0 Flops | 111 kFlop |
| maxpool_3 | Pooling | 0 Flops | 0 Flops | 0 Flops | 0 Flops | 83 kFlop | 0 Flops | 83 kFlop |
| reshape_1 | Reshape | 0 Flops | 0 Flops | 0 Flops | 0 Flops | 0 Flops | 0 Flops | 0 Flops |
| gemm_1 | Gemm | 38 MFlop | 4.1 kFlop | 0 Flops | 0 Flops | 0 Flops | 0 Flops | 76 MFlop |
| relu_6 | Relu | 4.1 kFlop | 0 Flops | 0 Flops | 0 Flops | 4.1 kFlop | 0 Flops | 12 kFlop |
| dropout_1 | Dropout | 0 Flops | 0 Flops | 0 Flops | 0 Flops | 4.1 kFlop | 0 Flops | 4.1 kFlop |
| dropout_1 | Dropout | 0 Flops | 0 Flops | 0 Flops | 0 Flops | 4.1 kFlop | 0 Flops | 4.1 kFlop |
| gemm_2 | Gemm | 17 MFlop | 4.1 kFlop | 0 Flops | 0 Flops | 0 Flops | 0 Flops | 34 MFlop |
| relu_7 | Relu | 4.1 kFlop | 0 Flops | 0 Flops | 0 Flops | 4.1 kFlop | 0 Flops | 12 kFlop |
| dropout_2 | Dropout | 0 Flops | 0 Flops | 0 Flops | 0 Flops | 4.1 kFlop | 0 Flops | 4.1 kFlop |
| dropout_2 | Dropout | 0 Flops | 0 Flops | 0 Flops | 0 Flops | 4.1 kFlop | 0 Flops | 4.1 kFlop |
| gemm_3 | Gemm | 4.1 MFlop | 1.0 kFlop | 0 Flops | 0 Flops | 0 Flops | 0 Flops | 8.2 MFlop |
| softmax_1 | Softmax | 0 Flops | 1.0 kFlop | 1.0 kFlop | 1.0 kFlop | 0 Flops | 0 Flops | 3.0 kFlop |
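The --human values above appear to follow a simple rule: scale by powers of 1000, show one decimal place when the scaled value is below 10 and none otherwise, and print counts below 1000 as plain Flops. The function below is our reconstruction of that rule from the table, not Benanza's code; the name `human_flops` is hypothetical.

```python
import math


def human_flops(n: int) -> str:
    """Format a flop count with SI prefixes, e.g. 4096 -> '4.1 kFlop'.

    Reconstructed from the --human output above; not Benanza's implementation.
    """
    if n < 1000:
        return f"{n} Flops"
    prefixes = ["k", "M", "G", "T", "P"]
    scaled = float(n)
    exp = 0
    # Divide by 1000 until the value fits the largest applicable prefix.
    while scaled >= 1000 and exp < len(prefixes):
        scaled /= 1000
        exp += 1
    # Round to one decimal place, rounding halves up.
    val = math.floor(scaled * 10 + 0.5) / 10
    fmt = "{:.1f}" if val < 10 else "{:.0f}"
    return fmt.format(val) + f" {prefixes[exp - 1]}Flop"


print(human_flops(101616768))  # 102 MFlop
print(human_flops(4096))       # 4.1 kFlop
```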

Weight Information

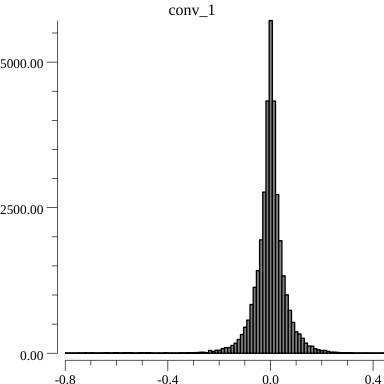

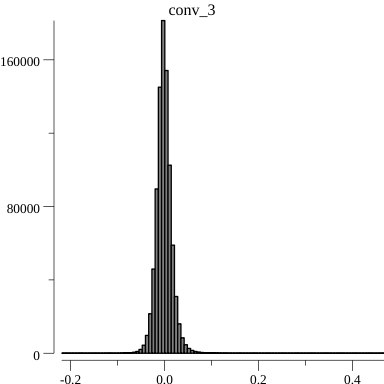

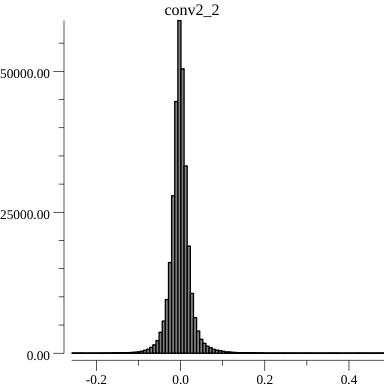

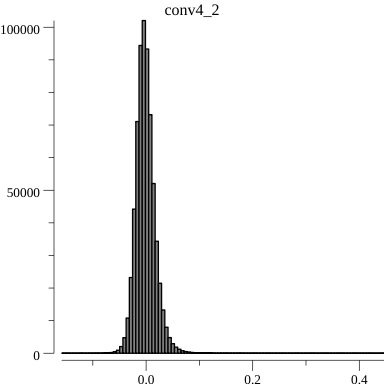

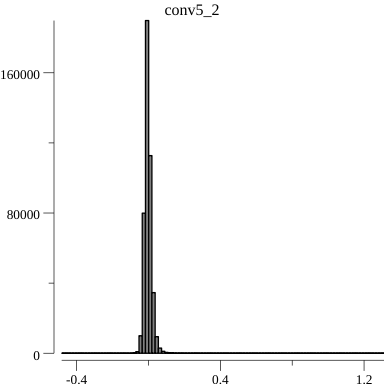

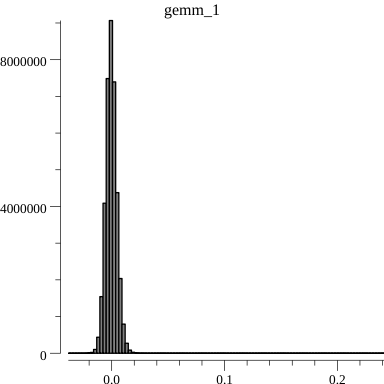

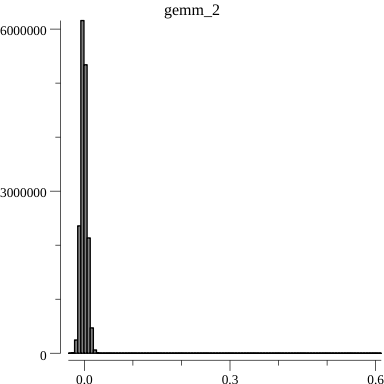

Benanza can examine the weights within a model to compute statistics such as their distribution and histogram. While this is outside the scope of the paper, it shows that the tool can be extended and generalized to other domains. Here we show how to get weight information for AlexNet:

benanza weightsinfo --model_path ///BVLC_AlexNet/model.onnx

The command outputs the following table:

| LAYERNAME | LAYERTYPE | LENGTH | LAYERWEIGHTSMAX | LAYERWEIGHTSMIN | LAYERWEIGHTSSDEV |
|---|---|---|---|---|---|
| conv_1 | Conv | 34944 | 0.44255533814430237 | -0.800920307636261 | 0.06429448279744572 |
| conv2_2 | Conv | 307456 | 0.4831761121749878 | -0.2586861848831177 | 0.02538710473291357 |
| conv_3 | Conv | 885120 | 0.46737396717071533 | -0.21888871490955353 | 0.01679956665487417 |
| conv4_2 | Conv | 663936 | 0.4463724195957184 | -0.15847662091255188 | 0.018438743467314958 |
| conv5_2 | Conv | 442624 | 1.3109842538833618 | -0.48335349559783936 | 0.021065162399881654 |
| gemm_1 | Gemm | 37752832 | 0.24130532145500183 | -0.03843037411570549 | 0.004962945202842958 |
| gemm_2 | Gemm | 16781312 | 0.6115601062774658 | -0.03297363966703415 | 0.00794123731339782 |
| gemm_3 | Gemm | 4097000 | 0.40080997347831726 | -0.43072983622550964 | 0.010868346074723081 |
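The LENGTH column is consistent with the number of weights plus biases in each layer. For example, conv_1 has 96 filters of shape 3×11×11 plus 96 biases (96 × 363 + 96 = 34944), and gemm_3 maps 4096 inputs to 1000 outputs (4096 × 1000 + 1000 = 4097000). The helpers below (hypothetical names) compute these counts. The layer shapes, including the two-way channel grouping assumed for conv2_2, conv4_2 and conv5_2, are our assumptions based on the standard AlexNet architecture, not values read from the tool.

```python
def conv_params(c_in: int, c_out: int, kernel: int, groups: int = 1) -> int:
    """Weights (c_out x c_in/groups x k x k) plus one bias per output channel."""
    return c_out * (c_in // groups) * kernel * kernel + c_out


def gemm_params(n_in: int, n_out: int) -> int:
    """Fully connected layer: an n_in x n_out weight matrix plus n_out biases."""
    return n_in * n_out + n_out


# Assumed AlexNet layer shapes, to compare against the LENGTH column.
lengths = {
    "conv_1": conv_params(3, 96, 11),
    "conv2_2": conv_params(96, 256, 5, groups=2),
    "conv_3": conv_params(256, 384, 3),
    "conv4_2": conv_params(384, 384, 3, groups=2),
    "conv5_2": conv_params(384, 256, 3, groups=2),
    "gemm_1": gemm_params(256 * 6 * 6, 4096),
    "gemm_2": gemm_params(4096, 4096),
    "gemm_3": gemm_params(4096, 1000),
}
for name, n in lengths.items():
    print(f"{name}: {n}")
```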

The command also generates figures that show the distribution of weights within each layer.
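For readers who want to reproduce similar numbers outside the tool, the sketch below computes the length, max, min and standard deviation of a flat list of weights, plus a simple equal-width histogram. It is an illustration, not Benanza's implementation. Whether the tool reports the population or the sample standard deviation, and how it bins its histograms, are unknown here. We assume the population standard deviation (`statistics.pstdev`) and use a hypothetical `num_bins` parameter.

```python
from __future__ import annotations

import statistics


def weight_stats(weights: list[float]) -> dict[str, float]:
    """Summary statistics for one layer's flattened weights."""
    return {
        "length": len(weights),
        "max": max(weights),
        "min": min(weights),
        "sdev": statistics.pstdev(weights),  # population standard deviation (assumed)
    }


def histogram(weights: list[float], num_bins: int = 10) -> list[int]:
    """Count weights in num_bins equal-width bins spanning [min, max]."""
    lo, hi = min(weights), max(weights)
    width = (hi - lo) / num_bins or 1.0  # avoid dividing by zero when all weights are equal
    counts = [0] * num_bins
    for w in weights:
        # Clamp so the maximum value falls into the last bin.
        idx = min(int((w - lo) / width), num_bins - 1)
        counts[idx] += 1
    return counts


sample = [-0.5, -0.25, 0.0, 0.0, 0.25, 0.5]
print(weight_stats(sample))
print(histogram(sample, num_bins=4))  # [1, 1, 2, 2]
```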